Here we present a new and simple operator in basic Calculus. It renders prominent deep learning frameworks and various AI applications more numerically robust. It is further advantageous in continuous domains, where it classifies trends accurately regardless of functions’ differentiability or continuity.

I used to teach my Advanced Calculus students that a function’s momentary trend is inferred from its gradient sign. Years later, in their fraud detection AI research, my employees encounter this notion often. Upon analyzing custom loss functions numerically, we treat momentary trends concisely and efficiently:

Upon implementing algorithms similar to the above, consider the case where we approximate the derivative sign numerically with the difference quotient:

$$sgn\left[\frac{dL}{d\theta}\left(\theta\right)\right]\approx sgn\left[\frac{L\left(\theta+h\right)-L\left(\theta-h\right)}{2h}\right]$$

This is useful for debugging your gradient computations, or when your custom loss function, tailored by domain considerations, isn’t differentiable. The numerical issue with the gradient sign lies in the redundant division by a small number. For $h>0$ the division doesn’t affect the final result, which is determined by the numerator alone: $sgn\left[L\left(\theta+h\right)-L\left(\theta-h\right)\right]$. However, it costs logarithmic or linearithmic computation time in the number of digits and occasionally results in an overflow. We’d better avoid it altogether.
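A minimal sketch of this observation follows. The function names and the absolute-value loss are hypothetical choices for illustration only; the point is that, for $h>0$, the sign of the central difference quotient and the sign of its numerator coincide, so the division can be skipped.

```python
def sign(v: float) -> int:
    """Return -1, 0 or +1 according to the sign of v."""
    return (v > 0) - (v < 0)

def trend_via_quotient(loss, theta: float, h: float = 1e-6) -> int:
    """Sign of the central difference quotient (includes the division by 2h)."""
    return sign((loss(theta + h) - loss(theta - h)) / (2 * h))

def trend_via_difference(loss, theta: float, h: float = 1e-6) -> int:
    """Sign of the numerator only; dividing by 2h > 0 cannot change the sign."""
    return sign(loss(theta + h) - loss(theta - h))

def abs_loss(theta: float) -> float:
    """Hypothetical loss with a kink (no derivative) at theta = 1."""
    return abs(theta - 1.0)

# Away from the kink, both estimates agree on the trend.
for theta in (-2.0, 0.5, 3.0):
    assert trend_via_quotient(abs_loss, theta) == trend_via_difference(abs_loss, theta)
```

Both helpers evaluate the loss twice; the second simply omits the division.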

Whenever you scrutinize your car’s dashboard, you notice Calculus. The odometer reading is equivalent to the definite integral of your speed over the trip so far, and the speedometer reflects the derivative of the distance traveled with respect to time. Clearly, both physical instruments merely approximate abstract notions.

Your travel direction is evidenced by the gear stick. Often, its matching mathematical concept is the derivative sign. If the car moves forward, moves in reverse, or stands still, then the derivative is positive, negative, or zero, respectively. However, calculating the derivative just to evaluate its sign is occasionally superfluous. As Aristotle and Newton famously argued, nature does nothing in vain. Following their approach, to define the instantaneous trend of change, we probably needn’t go through rate calculations. Put simply: if the trend of change is a basic term in process analysis, then perhaps we ought to express it concisely rather than as a by-product of the derivative?

This occasional superfluousness of the derivative causes the aforementioned issues in numeric and analytic trend classification tasks. To tackle them, we’ll attempt to simplify the derivative sign as follows:

$$ \require{color}\begin{align*}
sgn\left[f_{\pm}'\left(x\right)\right] & =sgn\underset{ {\scriptscriptstyle h\rightarrow0^{\pm}}}{\lim}\left[\frac{f\left(x+h\right)-f\left(x\right)}{h}\right]\\
& \colorbox{yellow}{$\texttip{\neq}{Apply suspension of disbelief: this deliberate illegal transition contributes to the below discussion }$}\underset{ {\scriptscriptstyle h\rightarrow0^{\pm}}}{\lim}sgn\left[\frac{f\left(x+h\right)-f\left(x\right)}{h}\right]\\
& =\pm\underset{ {\scriptscriptstyle h\rightarrow0^{\pm}}}{\lim}sgn\left[f\left(x+h\right)-f\left(x\right)\right]
\end{align*}$$

Note the deliberately erroneous transition in the second line. Switching the limit and the sign operators is wrong because the sign function is discontinuous at zero. Therefore the resulting operator, the limit of the sign of $\Delta y$, doesn’t always agree with the derivative sign. Further, the multiplicative nature of the sign function allows us to cancel out the division operation. Those facts may turn out in our favor, because of the issues we saw earlier with the derivative sign. Perhaps it’s worth scrutinizing the limit of the change’s sign in trend classification tasks?
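To see the disagreement concretely, here is a short worked example. The choice of $f\left(x\right)=x^{2}$ at $x=0$ is an illustrative assumption, not taken from the text above:

$$\begin{align*}
sgn\left[f_{+}'\left(0\right)\right] & =sgn\underset{ {\scriptscriptstyle h\rightarrow0^{+}}}{\lim}\left[\frac{h^{2}-0}{h}\right]=sgn\left(0\right)=0,\\
\underset{ {\scriptscriptstyle h\rightarrow0^{+}}}{\lim}sgn\left[f\left(0+h\right)-f\left(0\right)\right] & =\underset{ {\scriptscriptstyle h\rightarrow0^{+}}}{\lim}sgn\left(h^{2}\right)=+1.
\end{align*}$$

The derivative sign reports no trend at the minimum, whereas the limit of the sign of the change reports that the function increases immediately to the right of it.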

This novel trend definition methodology is similar to that of the derivative. In the latter, the slope of a secant turns into that of a tangent as the points approach each other. In contrast, the former calculates the trend of change in an interval surrounding the point at stake, and from it deduces, by applying the limit process, the momentary trend of change. Feel free to gain intuition by hovering over the following diagram:

Clearly, the numerical approximations of both the (right-) derivative sign and $\underset{ {\scriptscriptstyle h\rightarrow0^{+}}}{\lim}sgn\left[f\left(x+h\right)-f\left(x\right)\right]$ equal the sign of the finite difference operator, $sgn\left[f\left(x+h\right)-f\left(x\right)\right]$, for some small value of $h$. However, the sign of the difference quotient goes through a redundant division by $h$. This amounts to an extra logarithmic- or linearithmic-time division (depending on the precision), and might result in an overflow, since $h$ is small. In that sense, we find it worthwhile to think of trend approximations as $\underset{ {\scriptscriptstyle h\rightarrow0^{\pm}}}{\lim}sgn\left[f\left(x+h\right)-f\left(x\right)\right]$ rather than as the derivative sign.

Similar considerations lead us to apply the quantization directly to $\Delta y$ when we’re after a generic derivative quantization rather than its sign. That is, instead of quantizing the derivative, we calculate $Q\left[f\left(x+h\right)-f\left(x\right)\right]$, where $Q$ is a custom quantization function. In contrast with the trend operator, this quantization doesn’t preserve the derivative value once the division is skipped. However, the technique is good enough in algorithms such as gradient descent: where the algorithmic framework entails multiplying the gradient by a constant (e.g., the learning rate), we may spare the division by $h$ in each iteration and fold it into the pre-calculated constant itself.
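The folding of the division into the learning rate can be sketched as follows. This is a hedged illustration rather than a reference implementation: the function names, the quadratic loss, and the hyperparameter values are all assumptions made for the example.

```python
def gd_with_quotient(loss, theta, lr=0.1, h=1e-4, steps=100):
    """Gradient descent with a forward difference quotient in every iteration."""
    for _ in range(steps):
        grad = (loss(theta + h) - loss(theta)) / h  # one division per iteration
        theta -= lr * grad
    return theta

def gd_with_folded_step(loss, theta, lr=0.1, h=1e-4, steps=100):
    """Same update, with the division by h folded into a pre-calculated constant."""
    scaled_lr = lr / h                              # single division, done once
    for _ in range(steps):
        delta = loss(theta + h) - loss(theta)       # no division inside the loop
        theta -= scaled_lr * delta
    return theta

def quadratic_loss(theta):
    """Hypothetical loss with its minimum at theta = 3."""
    return (theta - 3.0) ** 2

a = gd_with_quotient(quadratic_loss, 0.0)
b = gd_with_folded_step(quadratic_loss, 0.0)
print(a, b)  # both land close to 3, up to the forward-difference bias
```

The two loops perform the same update up to floating-point rounding; the second one replaces a division per iteration with a single division before the loop.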

A coarse estimate of the percentage of computational time spared can be obtained by considering the computations other than the trend analysis operator itself. For example, say we are able to spare a single division operation in each iteration of gradient descent. If the loss function is simple, say one whose evaluation costs about as much as two division operations, then we spare roughly a third of the calculations in each iteration. If, however, the loss function is more computationally involved, for example one that includes logical operators, then the merit of sparing a division would be humbler.

Given this operator’s practical merit in discrete domains, let’s proceed with theoretical aspects in continuous ones.

As we’ve seen, in all those cases, $\underset{\Delta x\rightarrow0^{+}}{\lim}sgn\left(\Delta y\right)$ reflects the way we think about the trend: it always equals $a$ except for the constancy case, where it’s zeroed, as expected. It’s possible to show it directly with limit Calculus, see some examples below. We also concluded in the introductory section that the derivative sign doesn’t capture momentary trends except for $k\in \left\{0,1\right\}$. We gather that this operator does better in capturing trends at critical points.

We can establish a more rigorous justification by noticing how the definition of local extrema coalesces with that of the operator at stake. In contrast with its basic Calculus analog, the following claim provides a condition that is both sufficient and necessary, here for strict local extrema:

Theorem 1. Analog to Fermat’s Stationary Point Theorem. Let $f:\left(a,b\right)\rightarrow\mathbb{R}$ be a function and let $x\in \left(a,b\right).$ The following condition is necessary and sufficient for $x$ to be a strict local extremum of $f$:

$$\exists \underset{h\rightarrow0}{\lim}sgn\left[f\left(x+h\right)-f\left(x\right)\right]\neq0.$$

The avid Calculus reader will notice that this theorem immediately follows from the Cauchy limit definition.
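The condition in Theorem 1 can be probed numerically with a sketch like the one below. It samples $sgn\left[f\left(x+h\right)-f\left(x\right)\right]$ at a few small $h$ of both signs and checks whether the signs agree and are nonzero. The helper names and the sampled radii are assumptions; a finite sample is only a heuristic and proves nothing about the limit itself.

```python
def sign(v):
    """Return -1, 0 or +1 according to the sign of v."""
    return (v > 0) - (v < 0)

def two_sided_trend(f, x, radii=(1e-2, 1e-3, 1e-4)):
    """Common sign of f(x+h)-f(x) over sampled h of both signs, or None if they disagree."""
    signs = {sign(f(x + s * r) - f(x)) for r in radii for s in (1, -1)}
    return signs.pop() if len(signs) == 1 else None

def looks_like_strict_extremum(f, x):
    """Heuristic check of the theorem's condition: the sampled trend exists and is nonzero."""
    trend = two_sided_trend(f, x)
    return trend is not None and trend != 0

print(looks_like_strict_extremum(lambda x: x ** 2, 0.0))   # True: strict minimum
print(looks_like_strict_extremum(lambda x: -abs(x), 0.0))  # True: strict maximum, not differentiable
print(looks_like_strict_extremum(lambda x: x ** 3, 0.0))   # False: the one-sided signs differ
print(looks_like_strict_extremum(lambda x: 5.0, 0.0))      # False: the sign is zero
```

Note that the second example is a strict extremum at a point where the derivative does not exist, which is the case the classical stationary-point condition does not cover.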

Feel free to scrutinize the relation between the Semi-discrete and the continuous versions of Fermat’s theorem in the following animation:

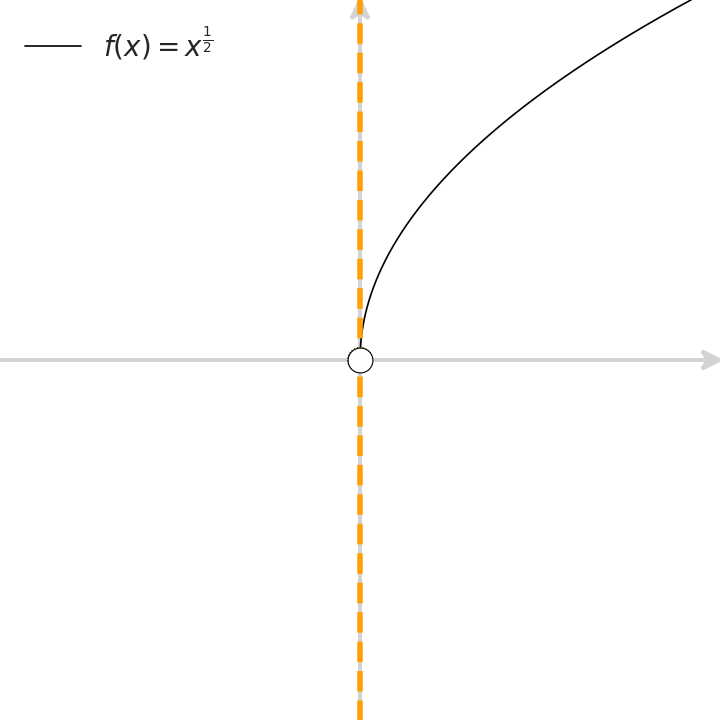

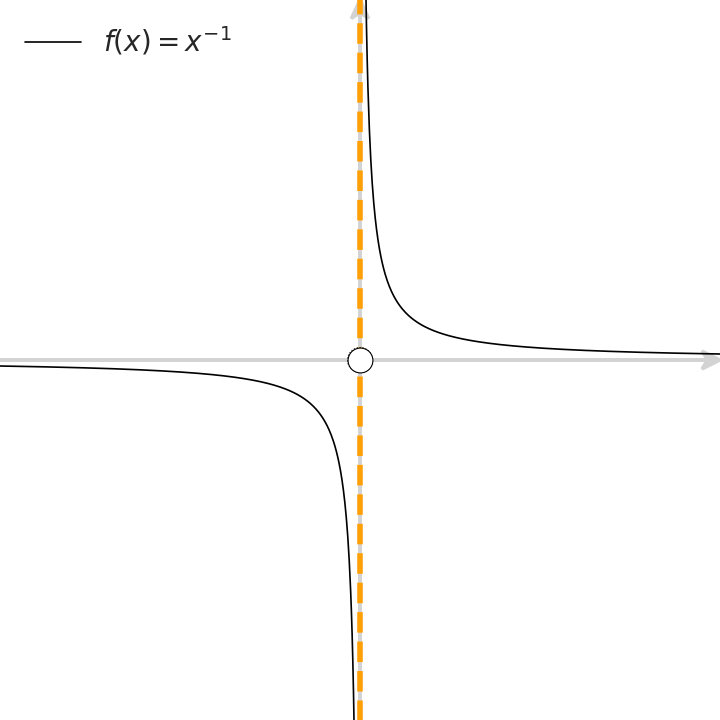

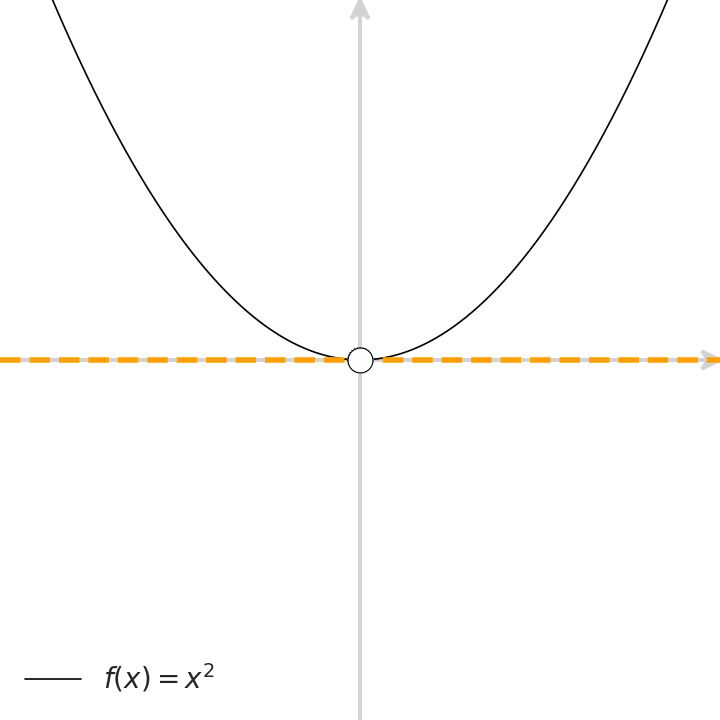

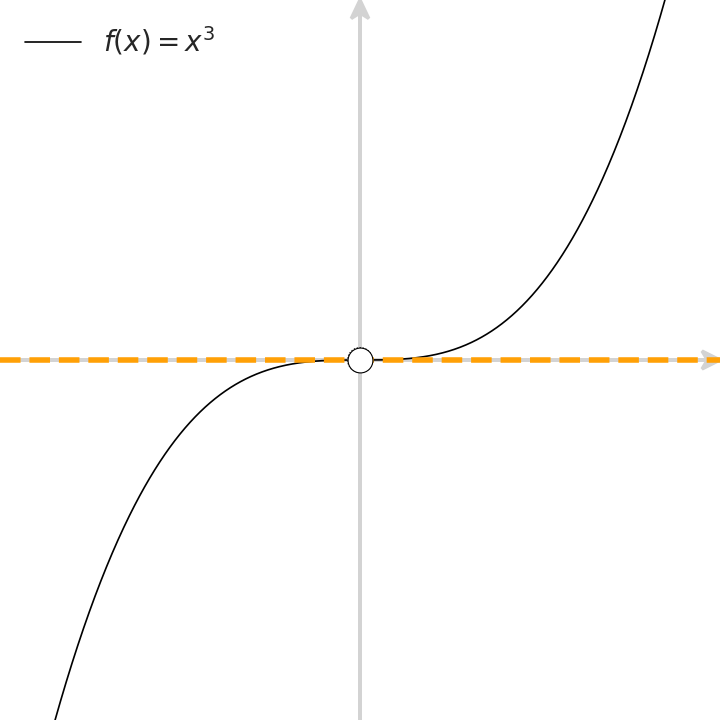

Next, let’s check in which scenarios this operator is well defined. We’ll cherry-pick functions with different characteristics around $x=0$. For each such function, we ask which of the properties (continuity, differentiability, and the existence of the operator at stake, that is, the existence of a local trend from both sides) hold at $x=0$. Scrutinize the following animation to gain intuition with some basic examples:

We would also like to find out which of those properties hold across an entire interval (for example, $[-1,1]$). To that end, we add two interval-related properties: Lebesgue and Riemann integrability. Feel free to explore those properties in the following widget, where we introduce slightly more involved examples than in the above animation. Switch between the granularity levels, and hover over the sections or click on the functions in the table, to confirm which conditions hold at each case:

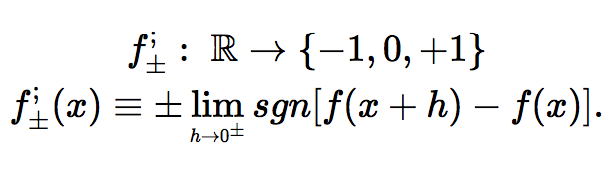

Let’s summarize our discussion thus far. The momentary trend, a basic analytical concept, has been embodied by the derivative sign for engineering purposes. It’s applied in foundational numeric algorithms across AI, optimization and other computerized applications. More often than not, it doesn’t capture the momentary trend of change at critical points. In contrast, $\underset{\Delta x\rightarrow0^{\pm}}{\lim}sgn\left(\Delta y\right)$ is more numerically robust in terms of finite differences, and it defines trends coherently wherever they exist, including at critical points. Given those merits, why don’t we dedicate a definition to this operator? As it “detaches” functions, turning them into step functions with discontinuities at extrema points, let’s define the one-sided detachments of a function $f$ as follows:

Geometrically speaking, for a function’s (right-) detachment to equal $+1$, for example, its value at the point needs to strictly bound the function’s values in a right-neighborhood of $x$ from below. This contrasts with the derivative sign, which assumes the existence of an ascending tangent.
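The geometric condition can be illustrated with a rough numerical estimate of the right-detachment, taken here to be $\underset{ {\scriptscriptstyle h\rightarrow0^{+}}}{\lim}sgn\left[f\left(x+h\right)-f\left(x\right)\right]$ as in the discussion above. The helper name, the sampled radii, and the example functions are assumptions for illustration; sampling finitely many $h$ cannot establish the limit.

```python
def sign(v):
    """Return -1, 0 or +1 according to the sign of v."""
    return (v > 0) - (v < 0)

def right_detachment_estimate(f, x, radii=(1e-2, 1e-4, 1e-6)):
    """Common sign of f(x+h)-f(x) over sampled small h > 0, or None if the signs disagree."""
    signs = {sign(f(x + r) - f(x)) for r in radii}
    return signs.pop() if len(signs) == 1 else None

def step(x):
    """Hypothetical discontinuous function: 0 up to and including 0, then 1."""
    return 1.0 if x > 0 else 0.0

print(right_detachment_estimate(abs, 0.0))            # 1: kink, no derivative at 0
print(right_detachment_estimate(step, 0.0))           # 1: jump, not even continuous at 0
print(right_detachment_estimate(lambda x: -x, 0.0))   # -1: ordinary descending line
```

In the first two cases the value at $0$ strictly bounds the values to its right from below, so the estimate is $+1$ even though no ascending tangent exists there.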

Feel free to scrutinize the logical steps that led to the definition of the detachment from the derivative sign in the following animation (created with Manim):

Equipped with a concise definition of the instantaneous trend of change, we may formulate analogs to Calculus theorems with the following trade-off. Those simple corollaries inform us of the function’s trend rather than its rate. In return, they hold for a broad set of detachable and non-differentiable functions. They are also outlined in [31].